Final Project (IDEAS + Workflow)

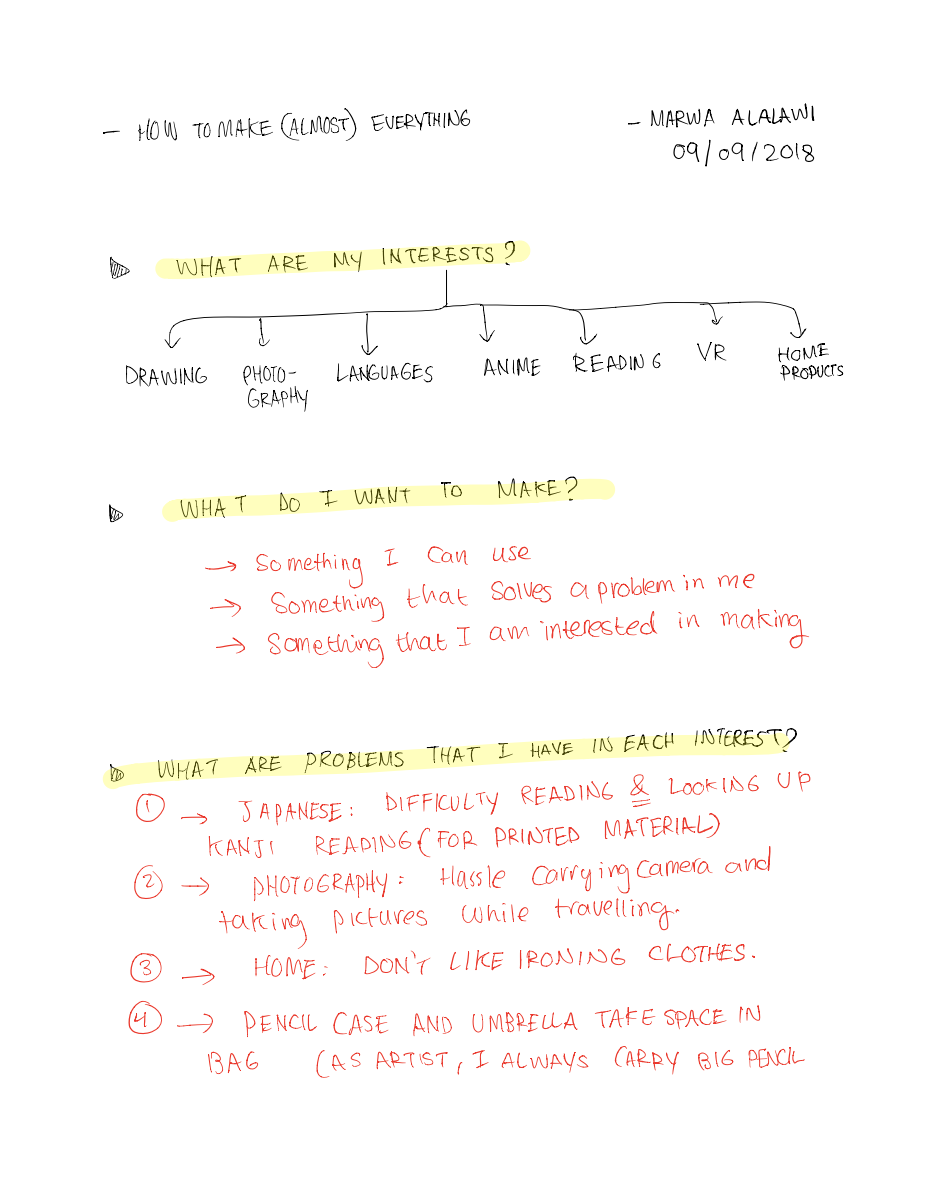

IDEAS: Deciding on an idea for my final project was such a hard thing to do. Mainly because there are so many things that I am always curious about and would like to make. So, after many ideas and sketches, I decided that I wanted to make something to solve a problem I face in my daily life, while being of interest, of course. So, I made a chart of what my intersts are, and another chart of possible problems/ inconveniences I usually encounter within those certains interests.

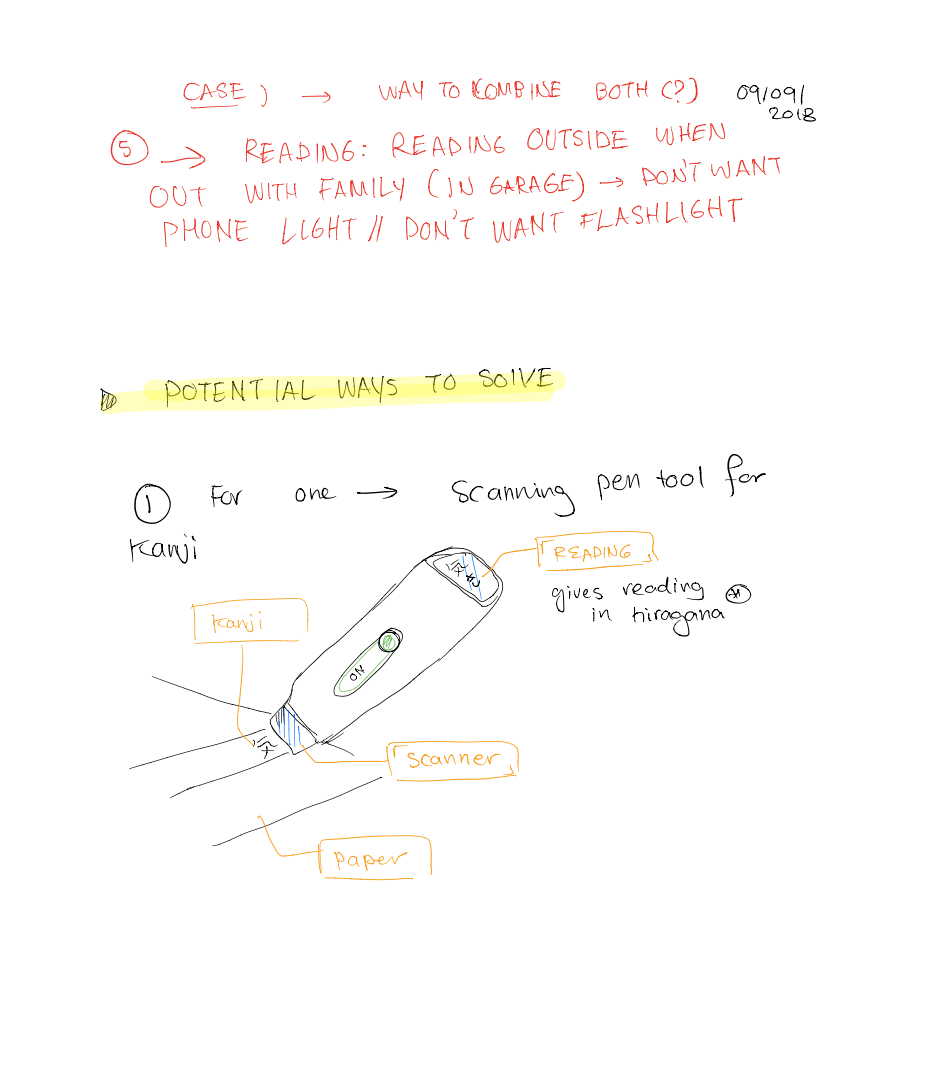

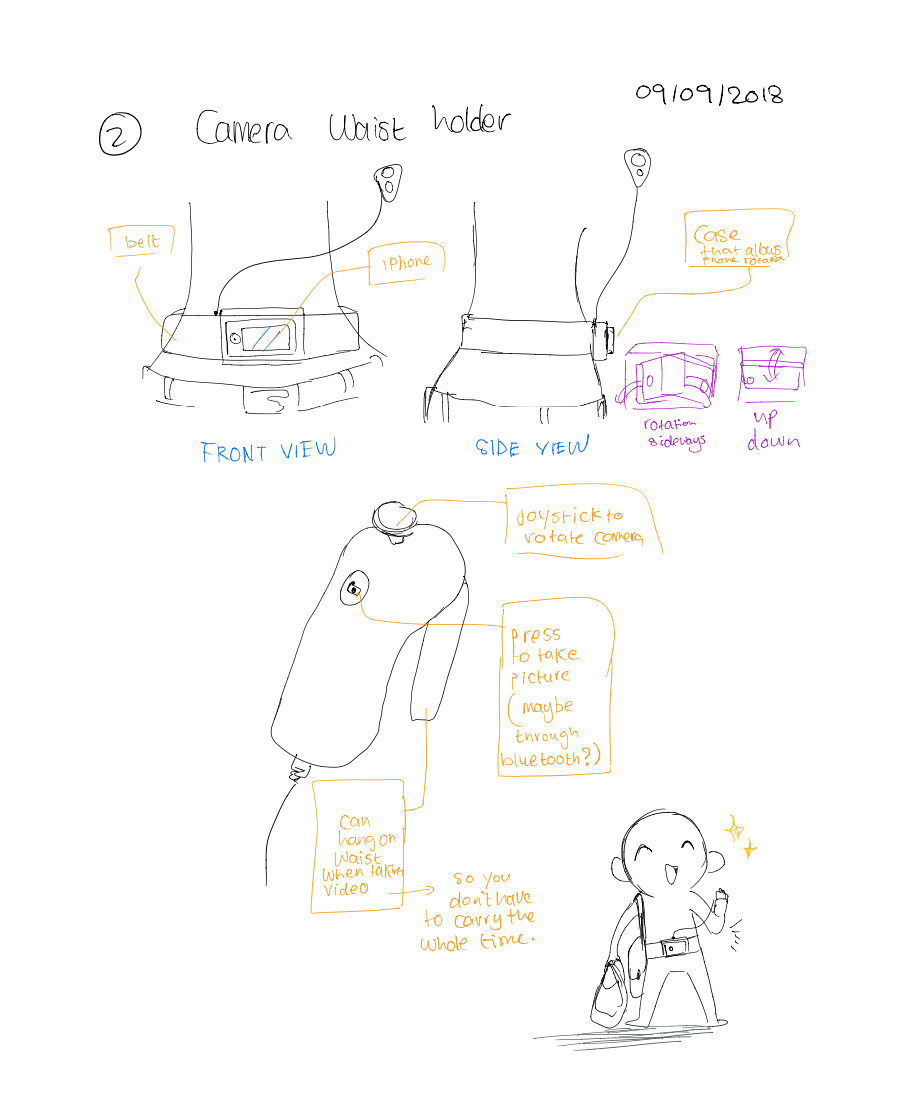

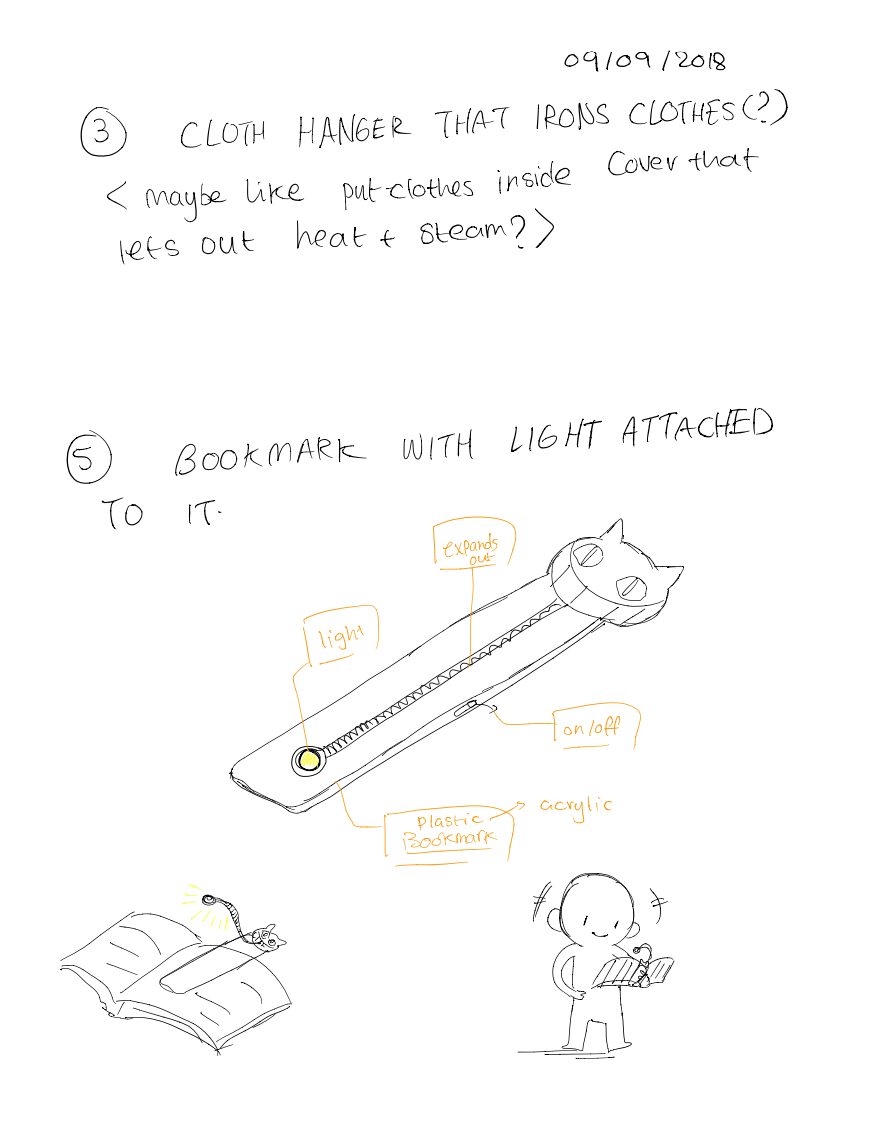

As a good starting point, I drew very rough sketches of "potential ways" I could solve these problems

I love learning languages! I speak four languages in total, and Japanese is one of them. Japanese is a very unique language compared to other langugaes in that it uses three sets of alphabets, or "writing systems" depending on the origin of the word. The three sets are: Hiragana (for native Japanese words), Katakana (for words imported from other languages), and Kanji --Chinese characters used in combination with Hiragana to give insight to word meaning. Any student of the Japanese language won't have problems memorizing Hiragana and Katakana as both are easy to read, are 46 characters each, and don't change in pronunciation according to the word they appear in. Kanji, on the other hand is 2,000 + characters that change in pronunciation according to word. Because I love reading Japanese novels, it is always daunting for me to go and look up the pronunciation or meaning of a certain kanji character or combination when I come across one I don't know. On top of that, keyboards that support kanji characters for typing only work when the pronunciation of the kanji is known-- which is not ideal for my case.

The only two availiable "time-consuming" technologies are either using the google translate application to take a picture of the text,and highlight the kanji you want to read. But this method doesn't give the reading, and instead simply gives the English translation. The other alternative is to use the iphone's chinese keyboard to write (with your finger) the kanji character, then look it up online or in a Japanese dictionary. However, sometimes the kanji writing could have multiple strokes and be overly complicated, that a user, like me, won't be successful in inputing.

So, long story short, to solve this pressing problem, my idea for my final project is: a scanning pen for Kanji characters! .

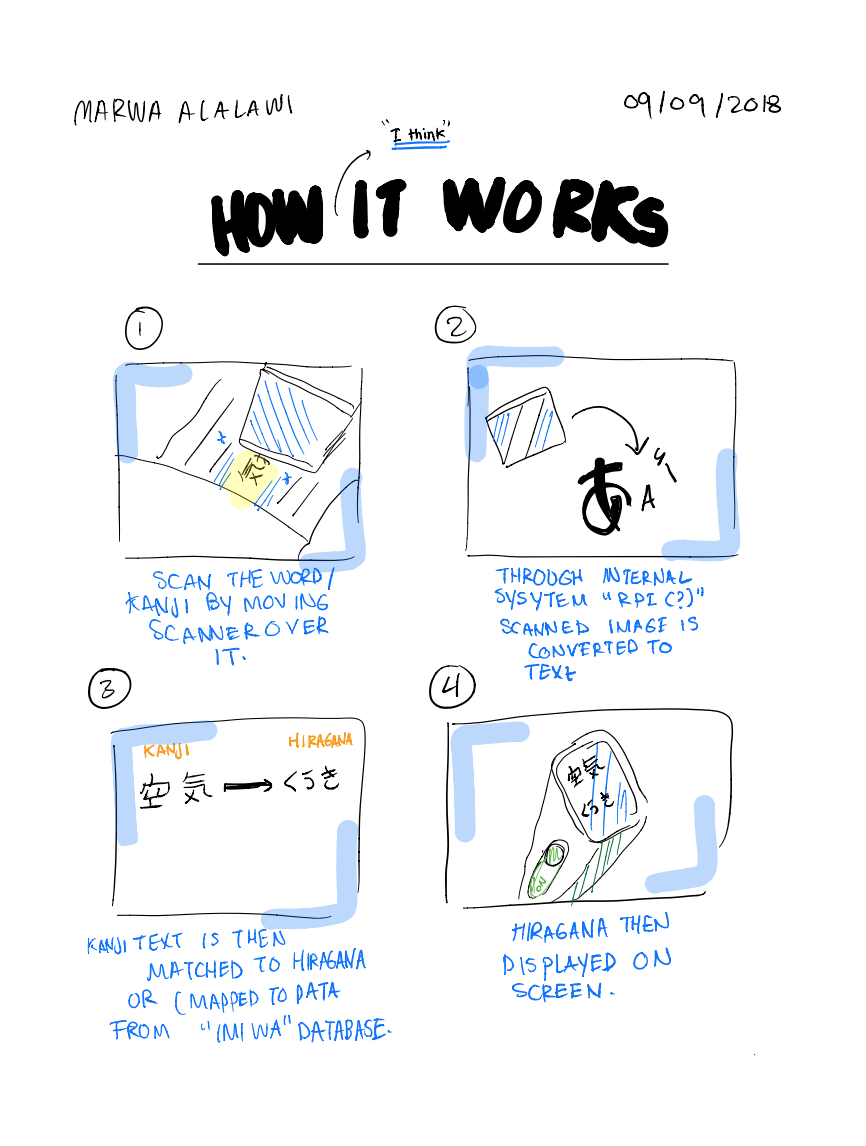

The pen works by having a scanner at its tip, where you scan the word. Then, through OCR (Optical Character Recognition), the image is transformed to text, and matched to the correct reading of the word in Hiragana.

To model this final project, I made a prelimeinary iteration using Solidworks of how the pen would look like. I like using solidworks because as a mechanical engineer, it is a platform that I am used to. It's inteface can be a bit confusing at first, but watching a couple of tutorials can help, and is definitely worth it! :)

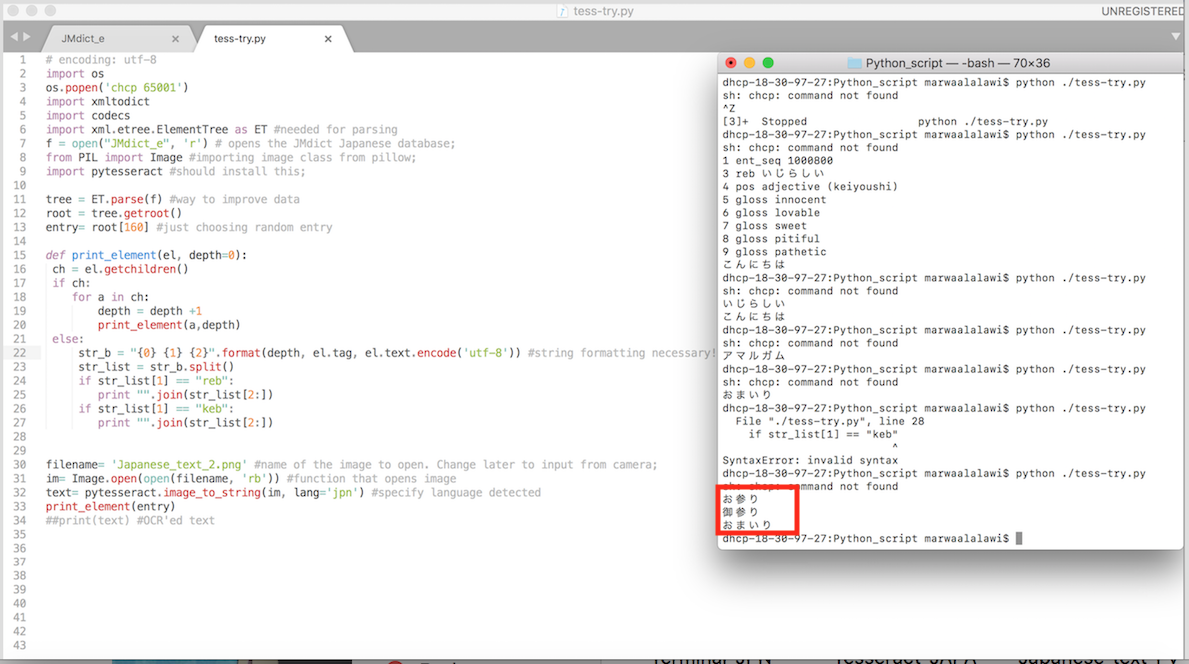

OCRS: By searching for some open source Optical Character Recognition platforms, I came across "Tesserect". I am currently fiddling with it to see how well it can detect Japanese text.

Tesserect: To get Tesseract working on my computer, I followed the instructions mentiond on its Github, which can be found here. Because I am using a mac, I did all the installation through Homebrew. It is very straightforward, so just follow the steps outlined on the Github page.

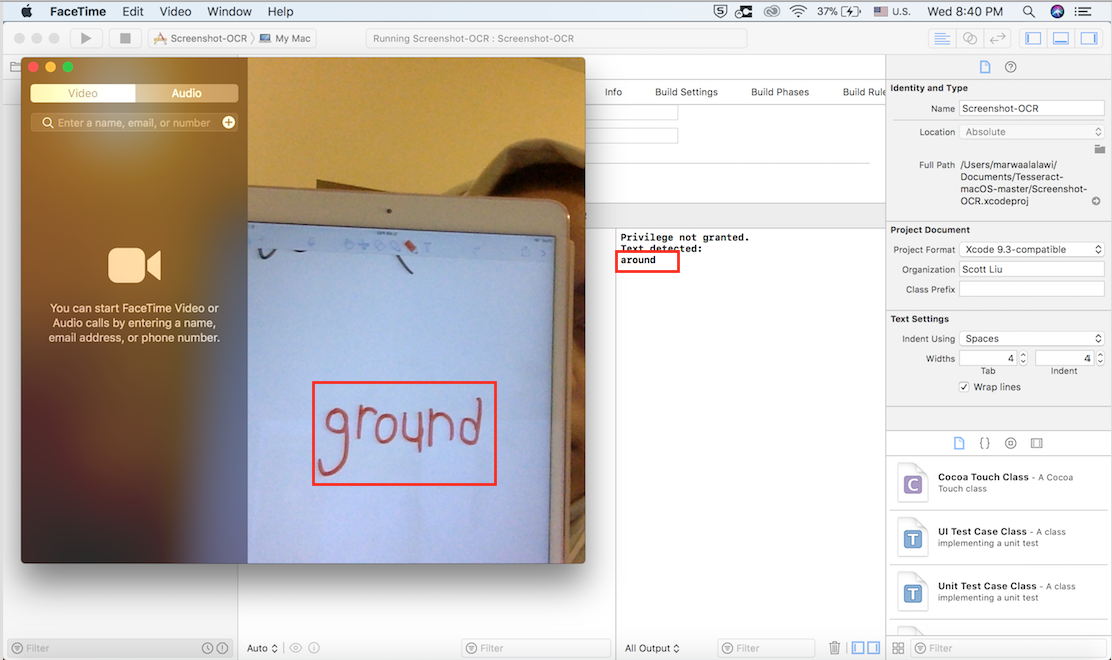

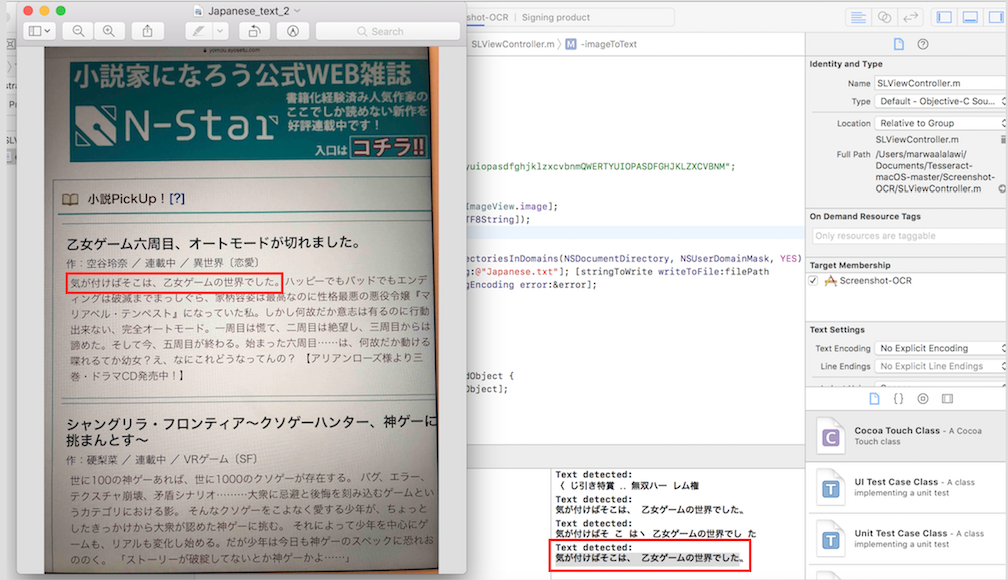

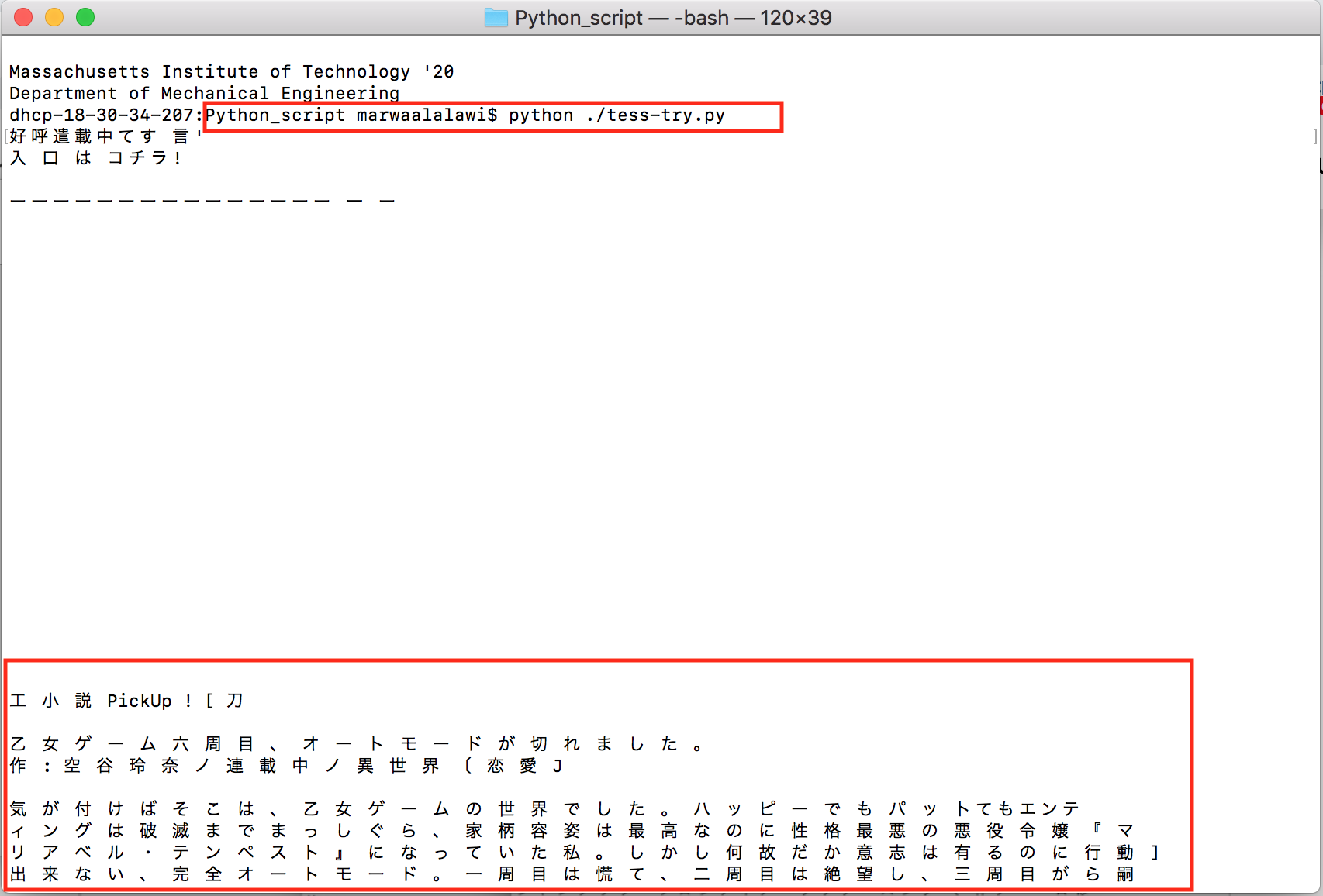

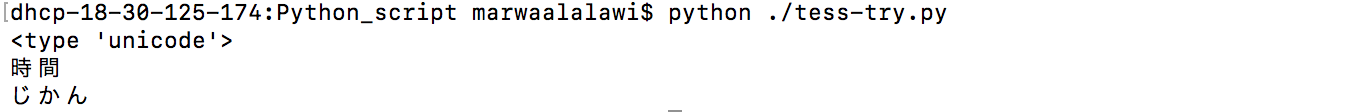

I also downloaded the "tessdata" for Japanese and vertical Japanese (because vertical Japanese is what novels use), and added them to the tessdata folder within the main tesseract folder.Since I needed to do a quick prototype to check how good Tesseract is at detecting characters, mainly Japanese Kanji characters, I used the example xcode that comes with the mac version of Tesseract. I first tested on English, and noticed that it was accurate for translating computer screenshot text images into actual text. However, I noticed that it wasn't very accurate when it came to handwritten text, which I tested by taking screenshots of my own handwriting. Useful Note, to know where the tessdata is added or tesseract is type "brew list tesseract" to see that.

Because I will hopefully be using the pen for printed material (novels), rather than hand-written material, the inaccuracy mentioned above should not pose as a hurdle.

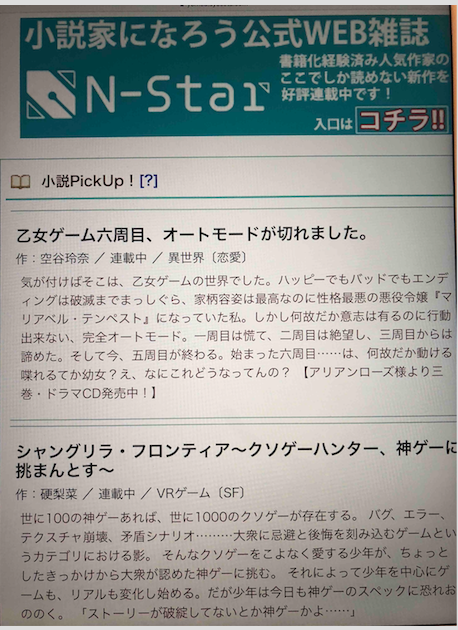

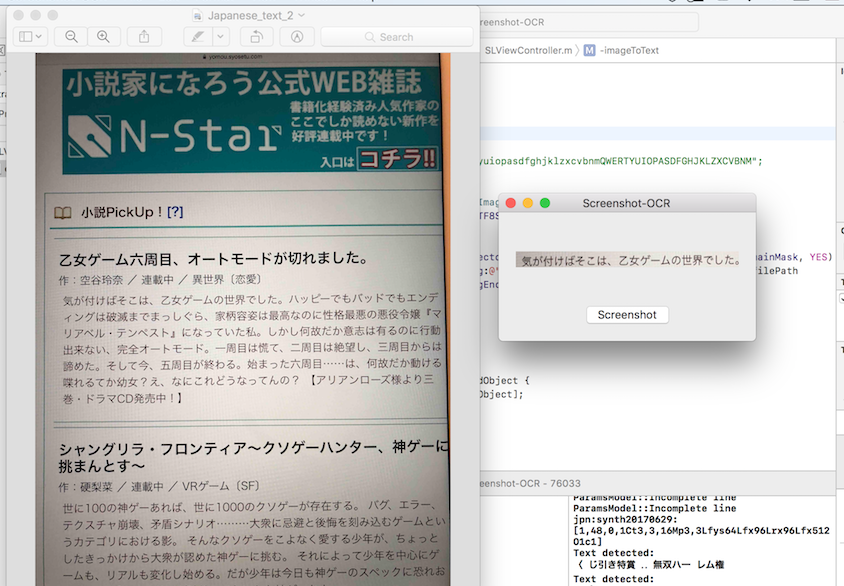

To test for Horizontal Japanese writing, I took an image with my phone of Japanese text, sent it to my laptop, then took a screenshot of it through the tesseract example code I changed from the deafult English text detection to Japanese detection. Note that to do that, you would have to go the file SLViewController.m and within the function ImageToText change the ocr.language from "@eng" to "@jap" for horizontal Japanese text.

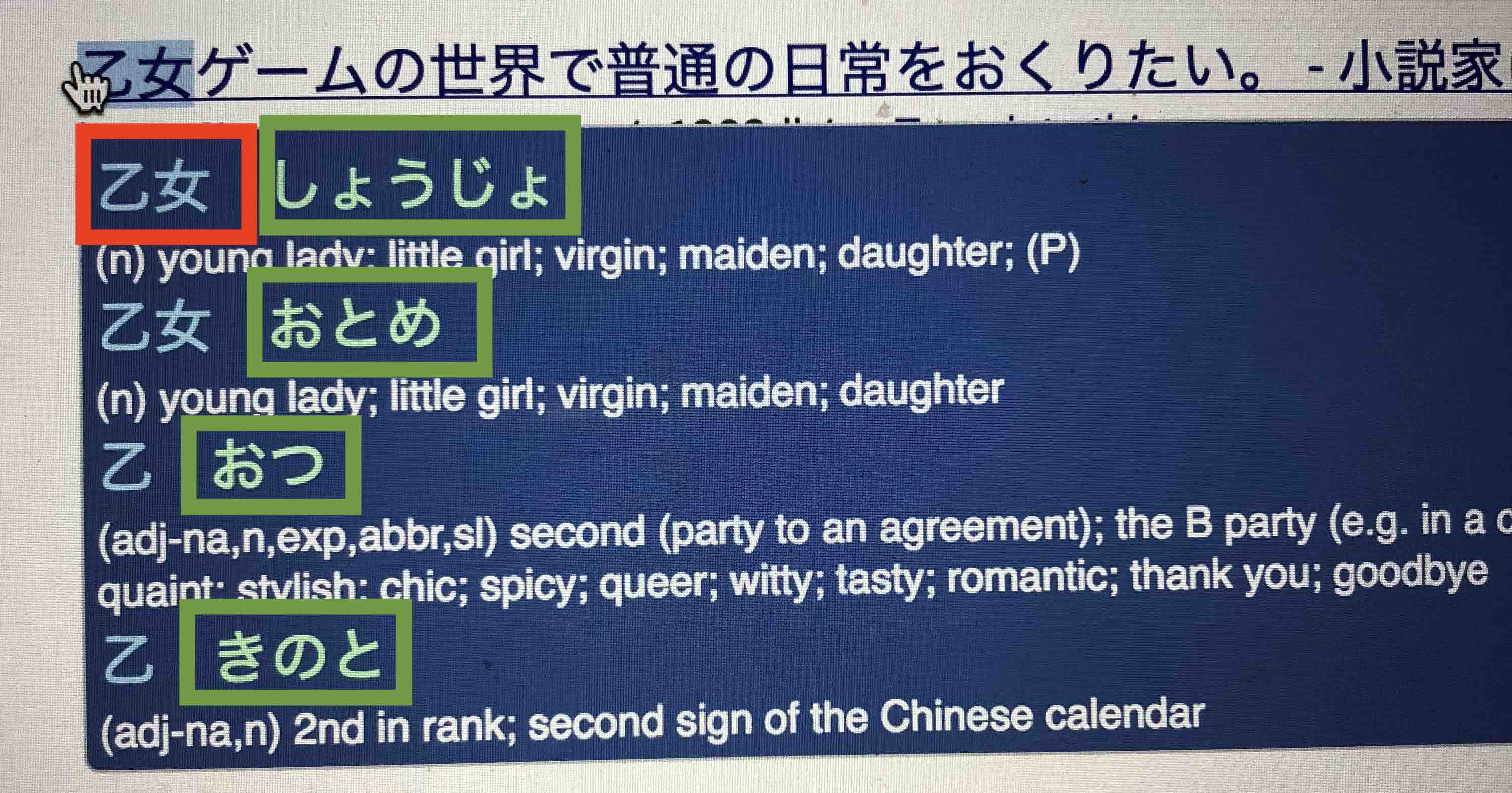

As I have mentioned in the previous (very long ago) section of this page, Google has a wonderful extension that basically produces the output that I would like my scanning pen to do (only limitation is that it does it for pure text, and not for images). That is, provide the reading of the Chinese (Kanji) chracters in the form of native Japanese characters (Hiragana). This google extension is called Rikai-kun. The picture below illustrates what I mean. Highlighted text (as wel as one bordered in red) is written in Kanji. Text in blue bubble and bordered in green is the Hiragana reading of the Kanji.

Rikaikun Google Extension: After some searching online, I found that Rikaikun is actually based on a free open source Japanese Dictionary called JMdict/EDICT

Setup:

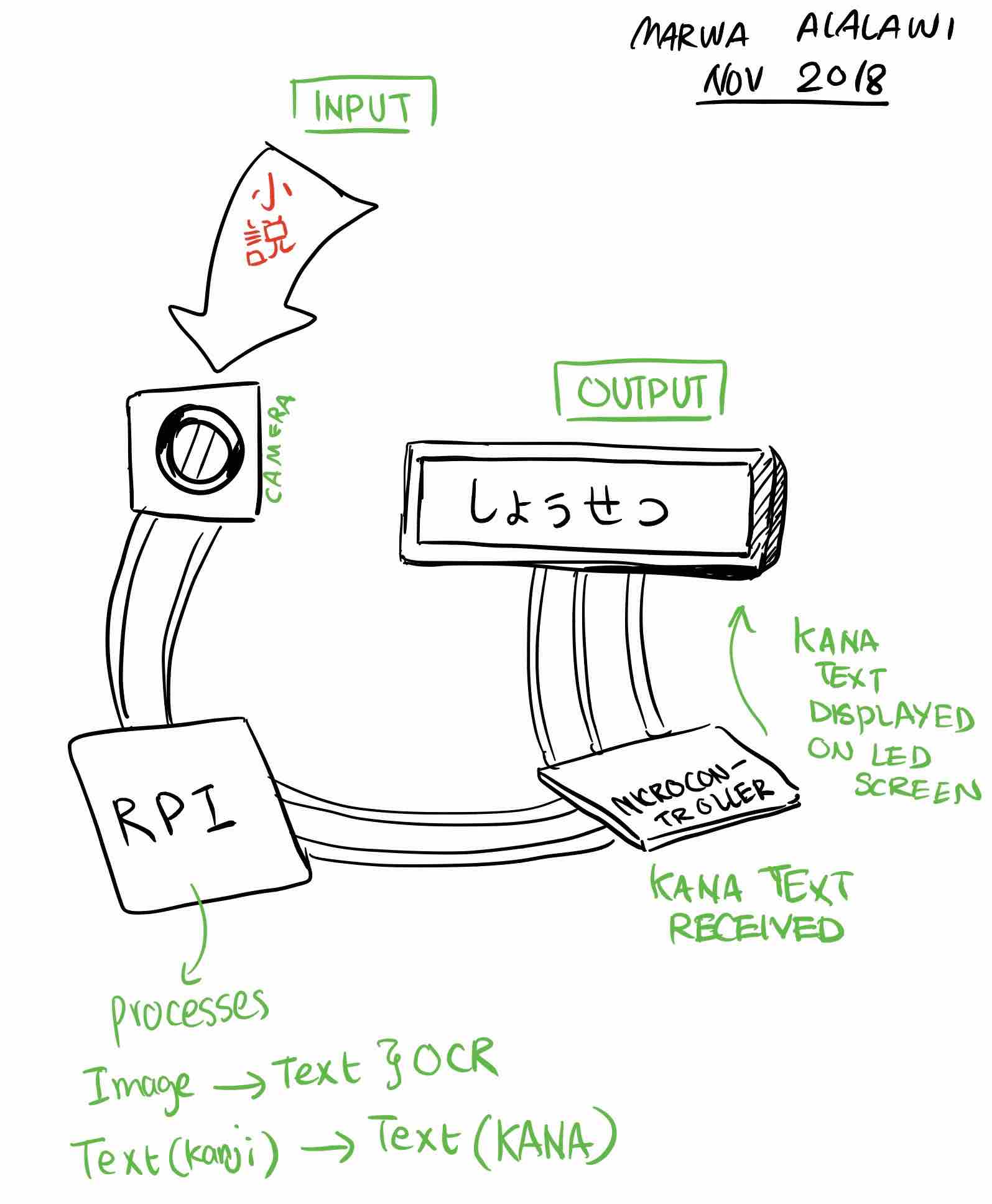

I am thinking of using a RPI zero, a microcontrorller that I build by myself, a camera, and an LED screen. The LED screen would connect to the microcontroller, and the microcontroller would talk to the RPI. The database would be in the RPI. My idea is that when a picture is taken, it is sent to the RPI, which in turn uses OCR to produce text and translate it. It then would communicate with the microcontroller and send it this text, which the microcontroller would then display through the LED screen. I am thinking of something along the lines of this tutorial, except that I don't plan on building a C# interface with visual studio.

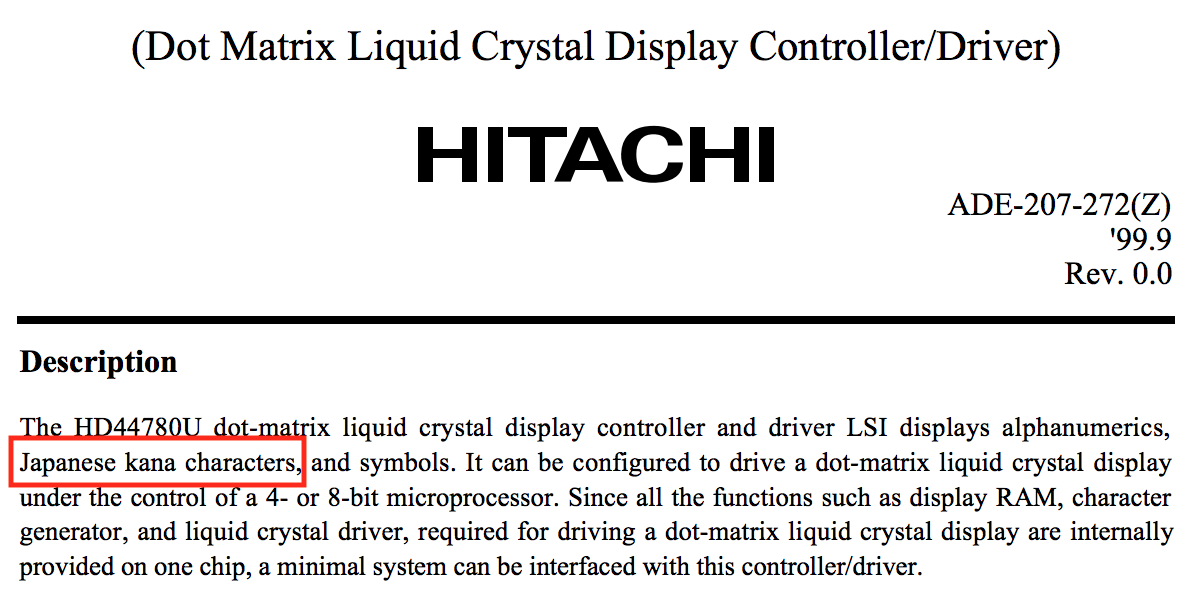

On the fab website, the suggested LCD is a Hitachi, and supports KANA characters, which is good for the purposes of this project.

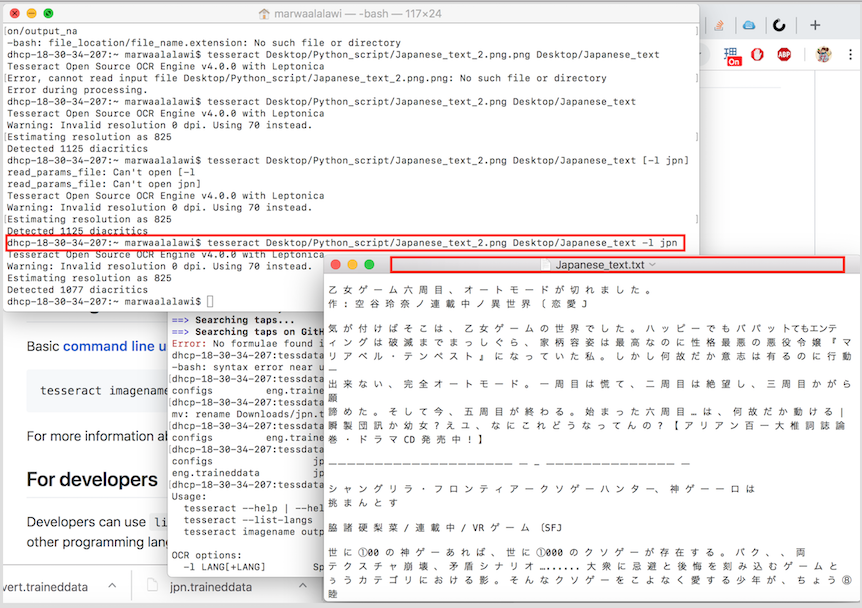

To run Tesseract through the Command line in Terminal. Basically use the following commands. (This is assuming that Tesseract is installed). Note that the default detected language is English. Also note that the deafult output for Tesseract, if directory is not specified, is the deafult home [Can be acessed on a mac if you click on Finder==> Go==> Home].

Note that the deafult format for output with tesseract is "txt". Also note that if your directiories or file names have "spaces" between them, use "" to encapsulate them.

Python & Tesseract: I researched which coding platform would work best with RPI and Tesseract for OCR purposes, and found a good number of tutorials/pages on how to combine these three elements together. To use Python with Tesseract, we first must install a couple of dependencies. Run the following code in your terminal window:

If you do not have a python installed, and are using a mac, then run the command "brew install python". That is also assuming that you have homebrew installed, which if you don't==> run the command (/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)")

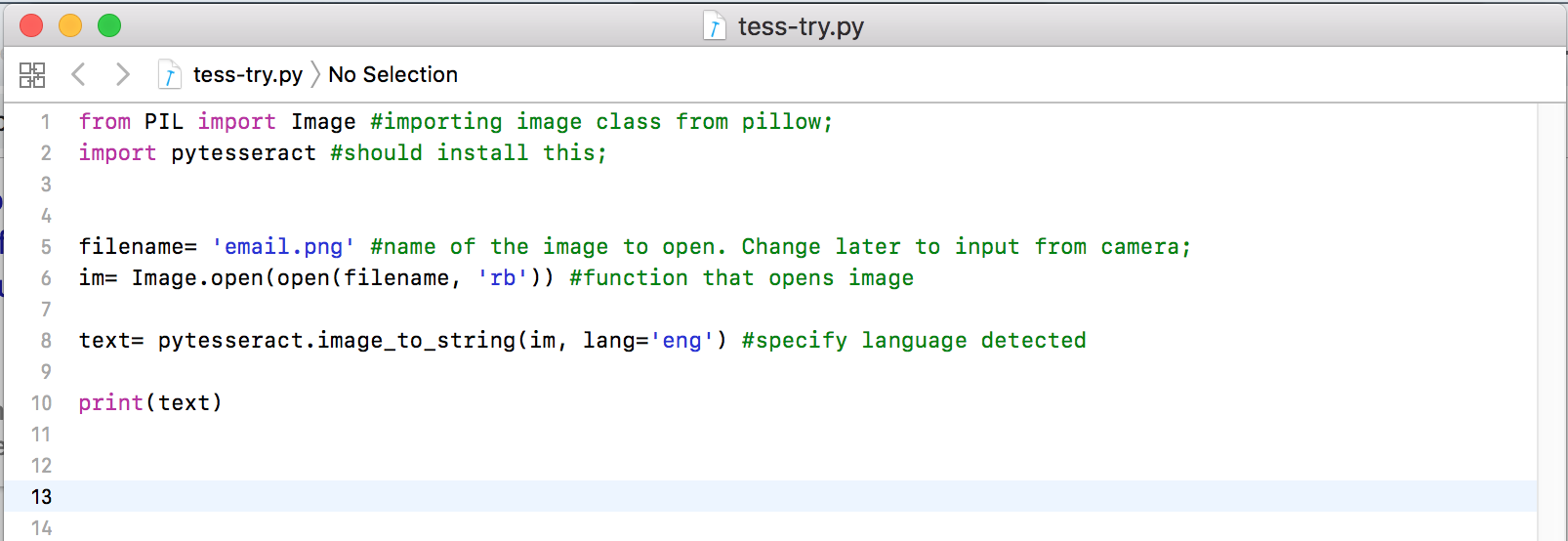

I created a simple python code as a draft. The python code currently works for English, but pytesseract hasn't been set for Japanese yet, which is why if I insert an image in Japanese text to it, I will receive an error message. If you want to create a python file from terminal==> "touch file_name.py". This creates a file for you on your user Desktop

To run python code from command line==> python ./code_name.py

TOUBLESHOOTING AND BUGS: So, To actually install tesseract and have the languages all installed through terminal correctly, I did the following. I Install Tesseract like what I outlined earlier, and then typed the following in terminal:

This allowed me to see where tessdata directory is located; For me, it happened that my tessdata is located in the following directory==> cd /usr/local/Cellar/tesseract/4.0.0/share/tessdata/

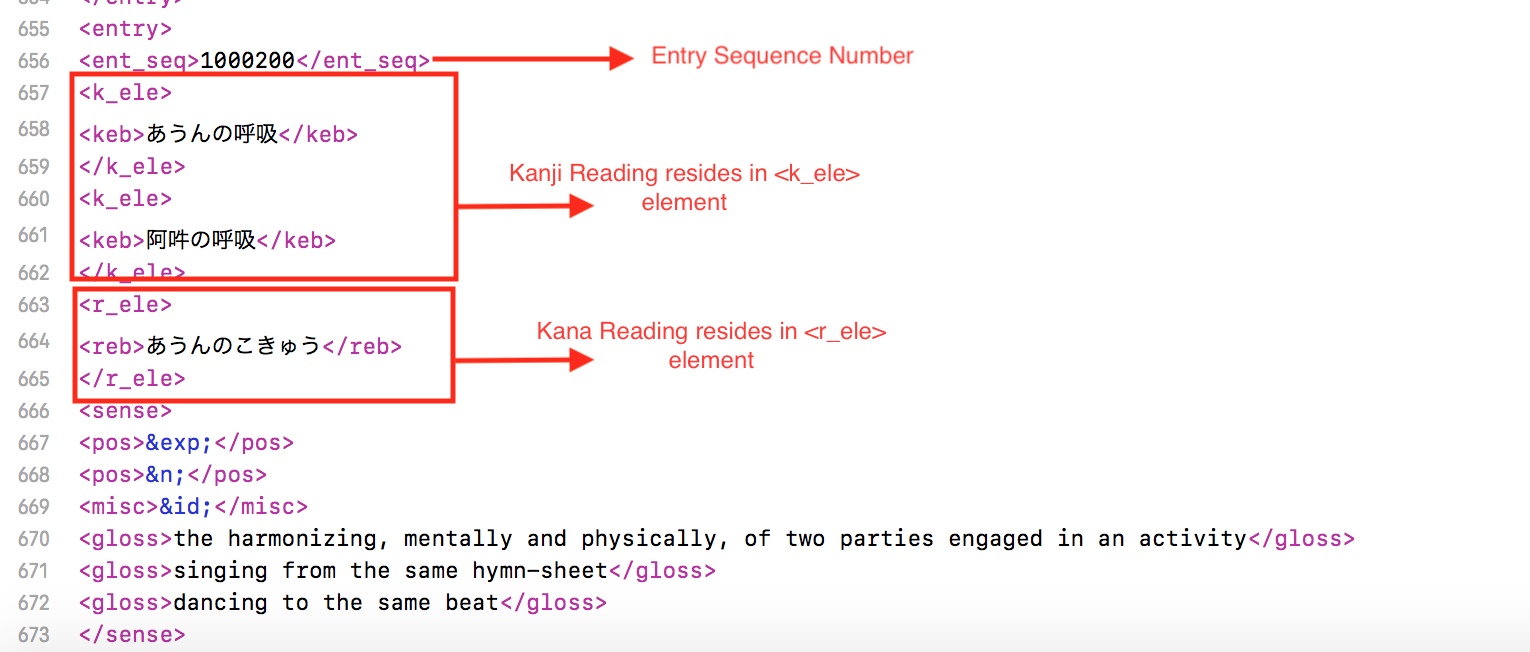

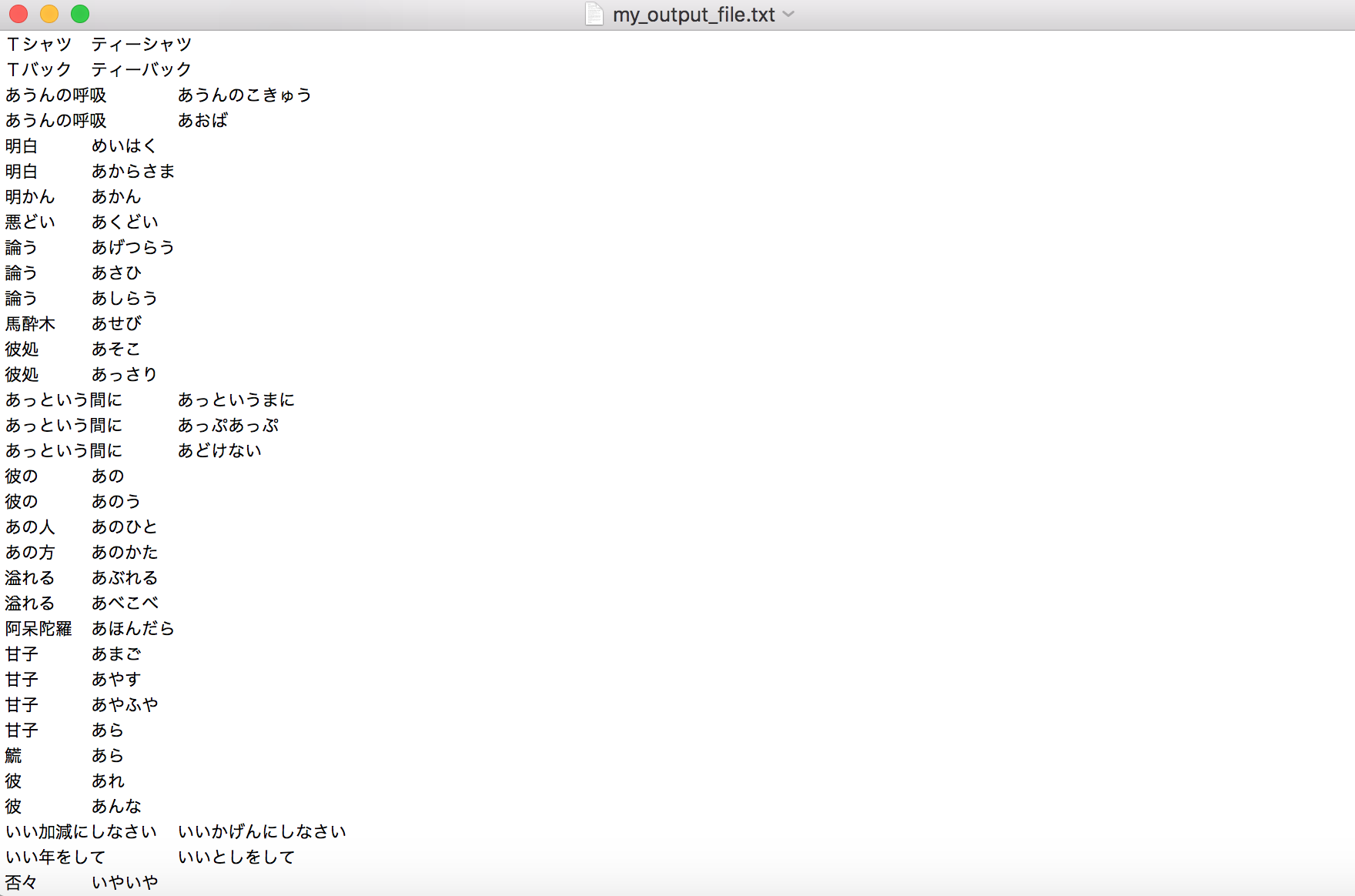

Mapping Kanji Text to Database: I downloaded JMDICT, and will use it as my database since it includes KANJI mapped to KANA. I looked at this tutorial to help me set off.

Parsing: The JMDICT database is structured in a way such that each entry contains a structure of elements, which means that if we would want to access the entries and the elements within them, we would have to "parse" data according to this page.

As a prototype for the code/database combination, I wrote a code where I would randomly select a Japanese entry in the database, and only have the Kanji and Kana readings be printed. I ran into some difficulty breaking up the strings (since xml is just wierd), so my friends Sarbari and Haripriya (course 6) helped explain, and guide me through. Notice how in the picture the Kanji and kana are both printed.

Final Code: I was able to successfully extract the kanji and kana elements using beatiful soup. The following code was only run once so that it creates the new database for me. The next step is to use this database and make it an even more useful format using Pandas.

Creating Database columns with Pandas: My freind, Haripriya, recommended that I use Pandas for database easy access reasons. I currently congergated my data into a python dictionary, and have this iteration of code running for it to return the Kana reading from a kanji detected by Tesseract.

Communicaton Between RPI and AVR ATTINY44 The idea is to have a button that controls the camera, and an LCD attached to the AVR ATTINY44 microcontroller I'll make. The camera is connected to RPI. However, for the camera to turn on, and for text to be displayed, communication is required between the two. I looked at this tutorial to know how to start about.

Links to look at later:

https://www.arduino.cc/en/Tutorial/HelloWorld

https://ernstsite.wordpress.com/page2-2/attiny85-and-rpi/

https://www.raspberrypi.org/forums/viewtopic.php?t=150524

https://www.instructables.com/id/Tutorial-Communication-between-a-RaspberryPi-and-a/

.