1.1 What the program can do

I'll introduce my question-answering project by giving an example that demonstrates what it does. The scenario is as follows:

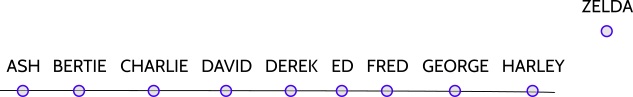

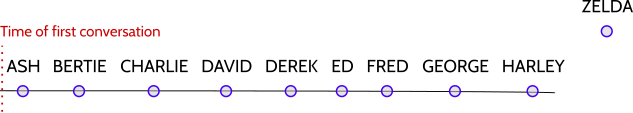

Scenario: Someone is hosting a party, and the guests are expected to arrive in a certain order.[1]

In other words, Ash will arrive first, followed by Bertie, Charlie, David, Derek, Ed, Fred, George, and Harley. One of the invited guests, Zelda, won't be coming.

Before the party begins, you decide to have a conversation with the host. Here's a transcript (the computer program plays the role of the party host):

Is Harley coming?

> YES.

Is Ash?

> YES.

Is Zelda?

> NO; SHE NEVER WILL.

Did Ash arrive safely?

> SINCE WHEN?

When David gets here, will Bertie have arrived?

> YES.

Will Ed?

> NO; NOT BY THEN.

Will Charlie just have?

> YES.

Will Harley turn up after George?

> YES.

Just after?

> YES.

Will Ash?

> NO.

When Zelda gets here, will Ed have arrived?

> ZELDA NEVER WILL.

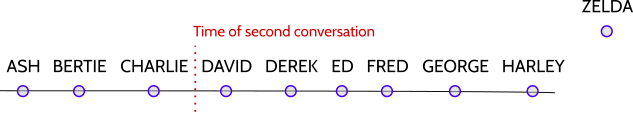

Later in the party — after Charlie arrives, but before David does — you have a second conversation:

Is Ash coming?

> NO; SHE ALREADY HAS.

Is Ed?

> YES.

When Fred gets here, will George have arrived?

> NO; NOT BY THEN.

Has Fred arrived since Ed got here?

> ED HASN'T YET.

This performance has several noteworthy features:

- It's about time. The program understands questions about temporal events and replies with appropriately tensed responses.

- The conversation accumulates context. Notice how naturally the conversation flows: the question "When David gets here, will Bertie have arrived?" is followed immediately by "Will Ed?" (rather than the more explicit "[When David gets here,] will Ed [have arrived]?"). The program makes such everyday ellipsis possible by storing the conversational context.

- The program handles mistaken questions. Some questions simply have the answer no ("Will Ed?" "NO."), but more importantly, some questions rest on mistaken premises ("Has Fred arrived since Ed got here?" "ED HASN'T YET" or "When Zelda gets here, will Ed have arrived?" "ZELDA NEVER WILL"). One of the primary goals of this project was to design a system that gracefully handles questions with false assumptions; after all, we humans readily know what to say when such questions come up in everyday life.

- The responses say just enough. Notice how the computer responds to "Will Ed [have arrived]?" ("NO; NOT BY THEN"): it supplies the extra phrase "NOT BY THEN" to reassure us that Ed will, in fact, arrive at some point after David. Or compare the response to "Is Zelda [coming]?" ("NO; SHE NEVER WILL") with the response to "When Zelda gets here, will Ed have arrived?" ("ZELDA NEVER WILL"). A pronoun will do in the first case but not in the second, where a name is required to distinguish which of the two people (Ed or Zelda) will never arrive.[2] See also: "Has Fred arrived since Ed got here?" "ED HASN'T YET".

- Replies are generated, not canned. One underlying feature of this program is that it assembles its replies from semantic information about tense, aspect, polarity, agent, and so on; responses are not simply looked up in a table. A consequence is that the program can generate tensed English sentences from semantic desiderata.
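The premise checking described in the list above can be illustrated with a small sketch. It is not the program's source: the names arrival-times, answer-when-will-have, and answer-has-since are hypothetical, questions are assumed to be parsed already, and arrival times are assumed to follow the 1-through-9 timeline used by set-current-time! (see 1.3.2). The key step is testing the question's presupposition (that the reference guest arrives, or has already arrived) before answering the question itself.

```clojure
(require '[clojure.string :as str])

;; Hypothetical data, not from arrivals.clj: arrival times follow the
;; 1-through-9 timeline; Zelda is absent because she never arrives.
(def arrival-times
  {"Ash" 1, "Bertie" 2, "Charlie" 3, "David" 4, "Derek" 5,
   "Ed" 6, "Fred" 7, "George" 8, "Harley" 9})

(defn answer-when-will-have
  "Hypothetical: answers \"When <anchor> gets here, will <subj> have
  arrived?\". The premise (that <anchor> arrives at all) is checked first."
  [anchor subj]
  (let [a (get arrival-times anchor)
        s (get arrival-times subj)]
    (cond
      (nil? a) (str (str/upper-case anchor) " NEVER WILL")       ; false premise
      (nil? s) (str "NO; " (str/upper-case subj) " NEVER WILL")  ; assumed reply, not in the transcript
      (< s a)  "YES"
      :else    "NO; NOT BY THEN")))  ; subj arrives, but not before anchor

(defn answer-has-since
  "Hypothetical: answers \"Has <subj> arrived since <anchor> got here?\"
  at time now. The question presupposes that <anchor> has already arrived."
  [subj anchor now]
  (let [a (get arrival-times anchor)
        s (get arrival-times subj)]
    (cond
      (nil? a)  (str (str/upper-case anchor) " NEVER WILL")
      (> a now) (str (str/upper-case anchor) " HASN'T YET")  ; false premise
      (and s (< a s) (<= s now)) "YES"
      :else     "NO")))
```

With these definitions, (answer-when-will-have "Zelda" "Ed") returns "ZELDA NEVER WILL", and (answer-has-since "Fred" "Ed" 3.5) returns "ED HASN'T YET", matching the two false-premise exchanges in the transcripts.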

1.2 What I aimed to do

In ordinary conversation, we humans are adept at understanding and answering questions about what has happened or might happen. For this project, I set out to create a computer program that can converse naturally about what has happened when.

From the beginning, I was inspired by Winograd's work on the blocks world and by Longuet-Higgins's book Mental Processes (on which this project is based). Above all, I wanted to make a language-understanding system that didn't simply produce trees from sentences (which statistical parsers can do even for nonsensical input), but one that used language as part of acting competently in a particular domain.

In short, I wanted language-understanding to serve a particular purpose, to be directed toward a particular goal.

When I selected time and tense as the domain of expertise, several more goals presented themselves: At a high level, I wanted the computer to converse "naturally". This led to two concrete goals: I wanted the system to behave conversationally, with a reasonable sense of where the conversation has been, and of what words can be elided. And I wanted the system especially to handle malformed questions — questions which are innocuously well-formed, but at odds with the facts. To handle those questions, I felt, would be the mark of graceful, conversant question-answering.

By these measures, the system succeeds fairly well. It still has a few bugs (situations it handles differently than a human would), but it shows an impressive command of temporal information and conversational constraints.

I had a few additional goals which I haven't been able to achieve yet, but which still seem possible and useful. In particular, I wanted to explore whether the simple structure of tense (defined in terms of an occurrence time, a reference time, and an utterance time) makes it easy to learn. I wanted to teach, through something like near-miss learning, the transduction function that maps a question and the facts at hand onto the semantic qualities of its answer. Such advances would make language learning and evolution part of this project; I intend to include them in future incarnations of this work.
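The occurrence/reference/utterance decomposition mentioned above follows Reichenbach's classic analysis of tense. As a rough sketch of how little structure it needs (the function and var names are hypothetical, not from arrivals.clj, and only the verb "arrive" is handled), tense can be read off by comparing the reference time R with the utterance time S, and the perfect by checking whether the occurrence time E precedes R:

```clojure
;; Hypothetical sketch of a Reichenbach-style analysis; not taken from
;; arrivals.clj. E = occurrence, R = reference, S = utterance time.
(defn tense-structure
  "Tense compares R with S; the perfect holds when E precedes R."
  [{:keys [occurrence reference utterance]}]
  {:tense    (cond (< reference utterance)  :past
                   (== reference utterance) :present
                   :else                    :future)
   :perfect? (< occurrence reference)})

(def arrive-forms
  "Surface forms of \"arrive\", keyed by [tense perfect?]."
  {[:past false]    "arrived"
   [:past true]     "had arrived"
   [:present false] "arrives"
   [:present true]  "has arrived"
   [:future false]  "will arrive"
   [:future true]   "will have arrived"})

(defn verb-phrase
  "Looks up the form of \"arrive\" for a tense structure."
  [{:keys [tense perfect?]}]
  (get arrive-forms [tense perfect?]))
```

For "When David gets here, will Bertie have arrived?" asked before the party, taking E = 2 (Bertie), R = 4 (David), and S = 0 yields future tense with the perfect, i.e. "will have arrived". Numeric equality uses == so that an integer R and a decimal S compare as expected.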

1.3 What you can do

You can download the source, arrivals.clj, or use the web-based REPL.

1.3.1 Run the demo with run-demo

To run the demo once you're in the ai.logical.arrivals namespace, simply call run-demo from the REPL:

```clojure
(run-demo)
```

The program will run through an automatic sequence of questions (the ones shown in the introduction) to demonstrate its capabilities.

Once you have a taste for how the program works, you can try out questions or statements of your own using the methods in the next two sections.

1.3.2 Ask questions with ask

Asking a question is as straightforward as calling ask with your question as a string argument. For example:

```clojure
(ask "Will Ash have arrived when Bertie gets here?")
```

Note that the time of conversation is automatically set to before the party, and the conversation accumulates context until reset either manually or by conversational cues.
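Before it can be answered, a question string has to be mapped to some semantic representation. The sketch below shows one way that two surface forms of the same question could map to the same kind of map; parse-question and its map keys are hypothetical, and its two regular expressions recognize only these exact patterns.

```clojure
;; Hypothetical sketch, not the program's parser: two surface forms of the
;; same question are mapped to one semantic map. Anything else yields nil.
(defn parse-question
  [s]
  (if-let [[_ anchor subj]
           (re-matches #"When (\w+) gets here, will (\w+) have arrived\?" s)]
    {:type :will-have-arrived, :when anchor, :who subj}
    (when-let [[_ subj anchor]
               (re-matches #"Will (\w+) have arrived when (\w+) gets here\?" s)]
      {:type :will-have-arrived, :when anchor, :who subj})))
```

Here the ask example and the transcript's "When David gets here, will Bertie have arrived?" both become :will-have-arrived maps, differing only in the guests named.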

To set a new time for the conversation, use, for example:

```clojure
(set-current-time! 3.5)
```

You can pass any real number as an argument. On this timeline, the guests arrive at the integers 1 through 9 in the order listed above (Ash at 1, Bertie at 2, and so on up to Harley at 9), so 3.5 corresponds to the time after Charlie arrives but before David does, as in the second demo conversation.
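Under this representation, the party's facts reduce to a map from guest to arrival time. A minimal sketch, assuming that representation (arrival-times, arrived-by?, and arrived-so-far are hypothetical names, not functions from arrivals.clj):

```clojure
;; Hypothetical representation, not taken from arrivals.clj: each guest
;; maps to an integer arrival time; Zelda is absent because she never
;; arrives.
(def arrival-times
  {"Ash" 1, "Bertie" 2, "Charlie" 3, "David" 4, "Derek" 5,
   "Ed" 6, "Fred" 7, "George" 8, "Harley" 9})

(defn arrived-by?
  "True if guest arrives at or before time t; false if the guest
  arrives later or never."
  [guest t]
  (if-let [arrival (get arrival-times guest)]
    (<= arrival t)
    false))

(defn arrived-so-far
  "Guests who have arrived by time t, in order of arrival."
  [t]
  (->> arrival-times
       (filter (fn [[_ arrival]] (<= arrival t)))
       (sort-by val)
       (map key)))
```

For example, (arrived-so-far 3.5) returns the guests present at the second conversation: Ash, Bertie, and Charlie.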

To reset the conversational context, use

```clojure
(forget!)
```

which causes the program to lose track of the current thread of conversation. Alternatively, to return the entire program to its initial state (resetting both the current time and the conversational context), use

```clojure
(restart!)
```
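One way to model the accumulating context that forget! clears is sketched below, assuming (hypothetically) that each question is a map of semantic slots: an elliptical follow-up such as "Will Ed?" supplies only the slots it mentions and inherits the rest from the previous question. The names context, interpret, and forget-context are illustrative, not taken from arrivals.clj.

```clojure
;; Hypothetical sketch, not taken from arrivals.clj: the conversational
;; context is the most recent complete question, stored in an atom.
(def context (atom nil))

(defn interpret
  "Fills in an elliptical question from the stored context, stores the
  result as the new context, and returns it."
  [partial-question]
  (swap! context merge partial-question))

(defn forget-context
  "Clears the stored context, so the next question must be complete."
  []
  (reset! context nil))
```

In this sketch, after (interpret {:type :will-have-arrived, :when "David", :who "Bertie"}), the follow-up (interpret {:who "Ed"}) yields {:type :will-have-arrived, :when "David", :who "Ed"}, the expanded reading of "Will Ed?".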

1.3.3 Generate English with say

To generate English with say, pass it a hash-map containing the features you want in your reply.

The recognized features are:

- tense: any of the keywords :past, :present, or :future. Defaults to present tense.

- aspect: either of the keywords :perfective or :progressive. Defaults to progressive aspect.

- sense: only the keyword :immediate, which indicates that the question is whether someone will just have arrived, etc.

- who: the subject of the sentence, i.e. a string containing a name.

- negated: if true, the response has negative polarity (e.g. "isn't coming"); otherwise, it has positive polarity (e.g. "is coming").

- reply-yes: if true, says YES before replying; if false, says NO before replying; if missing or nil, just replies. This is distinct from polarity, since you can reasonably say "YES; SHE'S NOT COMING." or "NO; SHE WILL BE COMING AFTER CHARLIE."

Here's an example:

```clojure
(say {:who "Dylan", :negated true, :tense :future, :aspect :perfective})
;; DYLAN WON'T HAVE BY THEN
```
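The sketch below does not reproduce say; it only illustrates how a reply can be composed from features, using a small table of auxiliaries plus the subject and polarity, rather than stored whole. In this hypothetical render-reply, only the auxiliary chain is produced (the main verb is elided), adverbials such as BY THEN are not generated, and the defaults follow the ones documented above. Its output is therefore shorter than say's: for the Dylan example it yields DYLAN WON'T HAVE.

```clojure
(require '[clojure.string :as str])

;; Hypothetical sketch, not the program's say: auxiliaries keyed by
;; [tense aspect negated?]. The main verb and adverbials such as
;; "BY THEN" are left out, so replies are elliptical ("ED HASN'T").
(def auxiliaries
  {[:past    :progressive false] "WAS"
   [:past    :progressive true]  "WASN'T"
   [:past    :perfective  false] "HAD"
   [:past    :perfective  true]  "HADN'T"
   [:present :progressive false] "IS"
   [:present :progressive true]  "ISN'T"
   [:present :perfective  false] "HAS"
   [:present :perfective  true]  "HASN'T"
   [:future  :progressive false] "WILL BE"
   [:future  :progressive true]  "WON'T BE"
   [:future  :perfective  false] "WILL HAVE"
   [:future  :perfective  true]  "WON'T HAVE"})

(defn render-reply
  "Builds a reply from a feature map. As documented for say, tense
  defaults to :present and aspect to :progressive."
  [{:keys [who tense aspect negated reply-yes]
    :or   {tense :present, aspect :progressive}}]
  (let [clause (str (str/upper-case who) " "
                    (get auxiliaries [tense aspect (boolean negated)]))]
    (cond
      (nil? reply-yes) clause                 ; no YES/NO prefix
      reply-yes        (str "YES; " clause)   ; reply-yes is truthy
      :else            (str "NO; " clause)))) ; reply-yes is false
```

Because polarity (negated) and the YES/NO prefix (reply-yes) are separate inputs, the sketch can produce combinations such as "NO; ZELDA ISN'T" as well as "YES; ED IS".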